The IT research firm Gartner, Inc. has released its February 2016 report, Magic Quadrant for Advanced Analytics Platforms. The report’s main graph shows the completeness of each company’s vision plotted against its ability to achieve that vision (Figure 1). I include this plot each year in my continuously updated article, The Popularity of Data Analysis Software, along with a brief summary of its major points. The full report is always interesting reading and, if you act fast, you can download it free from RapidMiner’s web site.

Figure 1. Gartner Magic Quadrant for 2016. What’s missing?

If you compare Figure 1 to last year’s plot (Figure 2), you’ll see a few noteworthy changes, but you’re unlikely to catch the radical shift that has occurred between the two. Both KNIME and RapidMiner have increased their scores slightly in both dimensions. KNIME is now rated as having the greatest vision within the Leaders quadrant. Given how much smaller KNIME Inc. is than IBM and SAS Institute, that’s quite an accomplishment. Dell has joined them in the Leaders quadrant through its acquisition of Statistica. Microsoft increased its completeness of vision, in part by buying Revolution Analytics. Accenture joined the category through its acquisition of i4C Analytics. LavaStorm and Megaputer entered the plot in 2016, though Gartner doesn’t specify why. These are all interesting changes, but they don’t represent the biggest change of all.

The watershed change between these two plots is hinted at by two companies that are missing in the more recent one: Salford Systems and Tibco. The important thing is why they’re missing. Gartner excluded them this year, “…due to not satisfying the [new] visual composition framework [VCF] inclusion criteria.” VCF is the term they’re using to describe the workflow (also called streams or flowcharts) style of Graphical User Interface (GUI). To be included in the 2016 plot, companies must have offered software that uses the workflow GUI. What Gartner is saying is, in essence, that advanced analytics software that does not use the workflow interface is not worth following!

Figure 2. Gartner Magic Quadrant for 2015.

Though the VCF terminology is new, I’ve long advocated its advantages (see What’s Missing From R). As I described there:

“While menu-driven interfaces such as R Commander, Deducer or SPSS are somewhat easier to learn, the flowchart interface has two important advantages. First, you can often get a grasp of the big picture as you see steps such as separate files merging into one, or several analyses coming out of a particular data set. Second, and more important, you have a precise record of every step in your analysis. This allows you to repeat an analysis simply by changing the data inputs. Instead, menu-driven interfaces require that you switch to the programs that they create in the background if you need to automatically re-run many previous steps. That’s fine if you’re a programmer, but if you were a good programmer, you probably would not have been using that type of interface in the first place!”

As a programming-oriented consultant who works with many GUI-oriented clients, I also appreciate the blend of capabilities that workflow GUIs provide. My clients can set up the level of analysis they’re comfortable with, and if I need to add some custom programming, I can do so in R or Python, blending my code right into their workflow. We can collaborate, each using his or her preferred approach. If my code is widely applicable, I can put it into distribution as a node icon that anyone can drag into their workflow diagram.

The Gartner report offers a more detailed list of workflow features. They state that such interfaces should support:

- Interactive design of workflows from data sources to visualization, modeling and deployment using dragging and dropping of building blocks on a visual palette

- Ability to parameterize the building blocks

- Ability to save workflows into files and libraries for later reuse

- Creation of new building blocks by composing sets of building blocks

- Creation of new building blocks by allowing a scripting language (R, JavaScript, Python and others) to describe the functionality of the input/output behavior

I would add the ability to color-code and label sections of the workflow diagram. That, combined with the creation of metanodes or supernodes (creating one new building block from a set of others), helps keep a complex workflow readable.
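To make the building-block idea concrete, here is a minimal Python sketch of the features listed above: parameterized blocks, blocks defined by a script, a metanode built from other blocks, and re-running the same recorded steps on new data. It is only an illustration under my own assumptions, not how KNIME, RapidMiner, SPSS Modeler or any other product in the report works internally. The `Node` and `Workflow` classes and the three helper functions are hypothetical names invented for this example.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Union


@dataclass
class Node:
    """One building block: a scripted function plus its parameters."""
    name: str
    func: Callable[..., Any]
    params: Dict[str, Any] = field(default_factory=dict)

    def run(self, data: Any) -> Any:
        return self.func(data, **self.params)


@dataclass
class Workflow:
    """An ordered chain of steps. Because it also has run(), a Workflow
    can be used as a single step inside another one (a metanode)."""
    name: str
    steps: List[Union[Node, "Workflow"]] = field(default_factory=list)

    def run(self, data: Any) -> Any:
        for step in self.steps:
            data = step.run(data)
        return data

    def describe(self) -> List[str]:
        """Return the record of every step, with nested steps prefixed."""
        names: List[str] = []
        for step in self.steps:
            if isinstance(step, Workflow):
                names.extend(f"{self.name}/{n}" for n in step.describe())
            else:
                names.append(f"{self.name}/{step.name}")
        return names


# Hypothetical scripted blocks operating on a plain list of numbers.
def drop_missing(rows):
    return [r for r in rows if r is not None]


def scale(rows, factor=1.0):
    return [r * factor for r in rows]


def mean(rows):
    return sum(rows) / len(rows)


# A metanode that bundles two blocks, one of them parameterized.
clean = Workflow("clean", [
    Node("drop_missing", drop_missing),
    Node("scale", scale, {"factor": 2.0}),
])

# The full workflow reuses the metanode as a single step.
analysis = Workflow("analysis", [clean, Node("mean", mean)])

# Repeating the analysis only requires changing the data input.
result_a = analysis.run([1.0, None, 3.0])
result_b = analysis.run([10.0, 20.0, None])
steps = analysis.describe()
```

Here `steps` plays the role of the diagram: it lists every step in order, including the ones hidden inside the `clean` metanode. A real workflow tool would also handle branching, merging of several inputs and saving to files, all of which this sketch leaves out.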

Implications

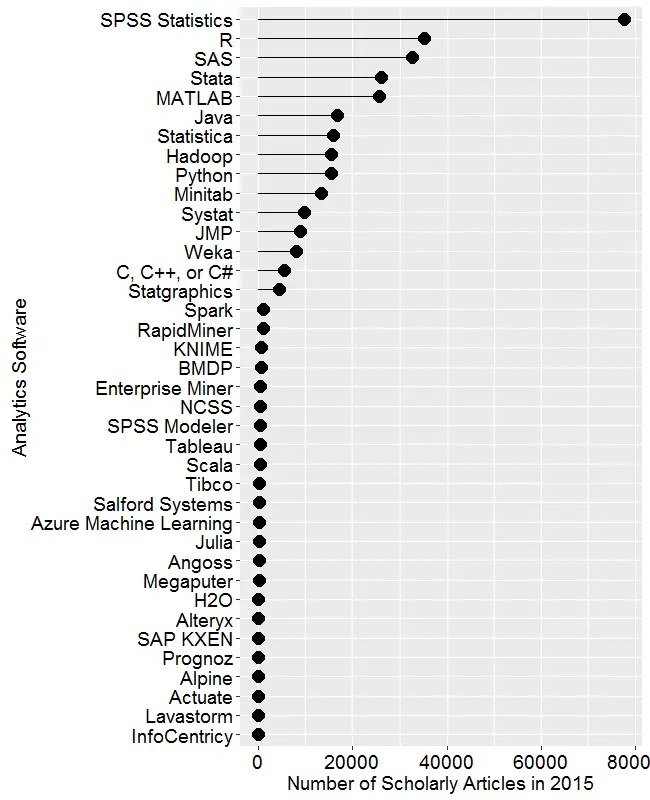

If Gartner’s shift in perspective resulted in them dropping only two companies from their reports, does this shift really amount to much of a change? Hasn’t it already been well noted and dealt with? No, the plot is done at the company level. If it were done at the product level, many popular packages such as SAS (with its default Display Manager System interface) and SPSS Statistics would be excluded.

The fields of statistics, machine learning, and artificial intelligence have been combined psychologically by their inclusion into broader concepts such as advanced analytics or data science. But the separation of those fields is still quite apparent in the software tools themselves. Tools that have their historical roots in machine learning and artificial intelligence are far more likely to have implemented workflow GUIs. However, while they have a more useful GUI, they tend to still lack a full array of common statistical methods. For example, KNIME and RapidMiner can only handle very simple analysis of variance problems. When such companies turn their attention to this deficit, the more statistically oriented companies will face much stiffer competition. Recent versions of KNIME have already made progress on this front.

SPSS Modeler can access the full array of SPSS Statistics routines through its dialog boxes, but the two products lack full integration. Most users of SPSS Statistics are unaware that IBM offers control of their software through a better interface. IBM could integrate the Modeler interface into SPSS Statistics so that all its users would see that interface when they start the software. Making their standard menu choices could begin building a workflow diagram. SPSS Modeler could still be sold as a separate package, one that added features to SPSS Statistics’ workflow interface.

A company that is on the cutting edge of GUI design is SAS Institute. Their SAS Studio is, to the best of my knowledge, unique in its ability to offer four major ways of working. Its program editor lets you type code from memory using features far advanced from their aging Display Manager System. It also offers a “snippets” feature that lets you call up code templates for common tasks and edit them before execution. That still requires some programming knowledge, but users can depend less on their memory. The software also has a menu & dialog approach like SPSS Statistics, and it even has a workflow interface. Kudos to SAS Institute for providing so much flexibility! When students download the SAS University Edition directly from SAS Institute, this is the only interface they see.

SAS Studio currently supports a small, but very useful, percentage of SAS’ overall capability. That needs to be expanded to provide as close to 100% coverage as possible. If the company can eventually phase out their many other GUIs (Enterprise Guide, Enterprise Miner, SAS/Assist, Display Manager System, SAS/IML Studio, etc.), merging that capability into SAS Studio, they might finally earn a reputation for ease of use that they have lacked.

In conclusion, the workflow GUI has already become a major type of interface for advanced analytics. My hat is off to Gartner for taking a stand on encouraging its use. In the coming years, we can expect to see the machine learning/AI software adding statistical features, and the statistically oriented companies continuing to add more to their workflow capabilities until the two groups meet in the middle. The companies that get there first will have a significant strategic advantage.

Acknowledgements

Thanks to Jon Peck for suggestions that improved this post.